Oracle Big Data Appliance Delivery Day

It’s been about two and a half years since Enkitec took delivery of our first Exadata. (I blogged about it here: Weasle Stomping Day.) Getting our hands on Exadata was very cool for all of us geeks. A lot has changed since then, but we’re still a bunch of geeks at heart, so this week we indulged our geekdom once again with the delivery of our Big Data Appliance (BDA). In case you haven’t heard about it, Oracle has released an engineered system designed to host “Big Data” (not my favorite term, but I’ll have to save that for some other time). The Hadoop ecosystem has taken off in the last couple of years, and this is Oracle’s initial foray into the arena. The BDA comes loaded with 18 servers, each sporting 36 terabytes of storage, for a whopping total of 648 terabytes. It also comes with Cloudera’s distribution of Hadoop (plus software from various other open source projects that are part of the Hadoop ecosystem). We’re very excited to start working with Cloudera and Oracle on this new approach to managing large data sets. Anyway, here are a few pictures:

The rack’s pretty heavy with all the disk drives. One of the delivery guys said he once had a full rack of EMC drives actually fall through the floor of the office building they were delivering it to (no one was hurt). Fortunately we didn’t have any mishaps. And at a couple thousand pounds, we will not be moving it around to see how it looks next to the coffee table (like we do with slightly less heavy pieces of furniture at home).

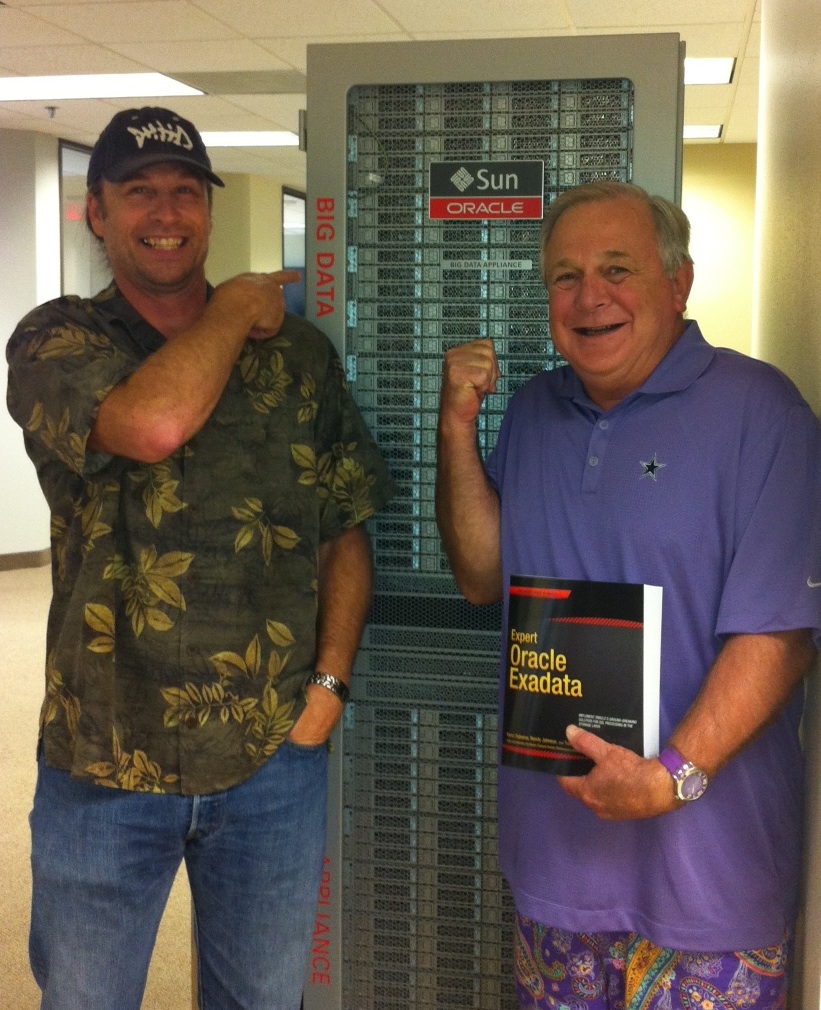

This is me and my buddy Pete Cassidy (Oracle Instructor Extraordinaire) messing around.

Another picture of me and Pete. Not as good as the other one, but I love the shoes!

Tim Fox loves the big power cables.

The BDA also has a handy beer shelf (this is the top secret new feature).

The BDA cabinet has a lock, and of course the keys were in a well-labeled plastic bag. I had Andy Colvin hold up the label so I could take his picture. I called the shot “DoorKeyAndy”. It seemed appropriate. 😉