SQL Profiles

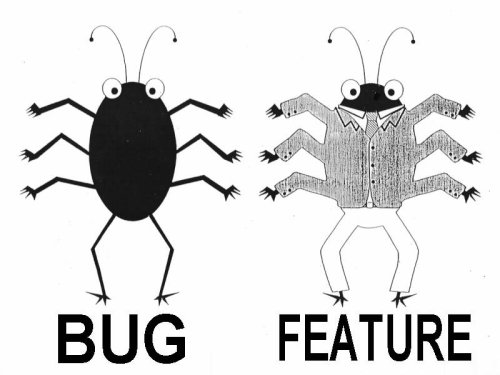

Well, I was wrong! SQL Profiles are better than Outlines. For a while now I have been saying that I didn’t like SQL Profiles because they were less stable than Outlines. It turns out that what I really didn’t like was the OPT_ESTIMATE hint used by the SQL Profiles that the SQL Tuning Advisor creates. I just didn’t know it.

Let me back up for a minute. I posted about Oracle’s SQL Tuning Advisor a while back. It’s a feature that was added to Oracle in version 10g. It basically looks at a SQL statement and tries to come up with a better execution plan than the one the optimizer has picked. Since it is allowed as much time as it wants to do its analysis, the advisor can sometimes find better approaches. That’s because it can actually validate the optimizer’s original estimates by running various steps in a given plan and comparing the actual results to the estimates. When it’s all done, if it has found a better plan, it offers to implement that new plan via a SQL Profile. Those offered Profiles often have a lightly documented hint (OPT_ESTIMATE) that allows the Profile to scale the optimizer’s estimates for various operations; essentially it’s a fudge factor. The problem with this hint is that, far from locking a plan in place, it is locking an empirically derived fudge factor in place. This still leaves the optimizer with a lot of flexibility when it comes to choosing a plan. It also sets up a commonly occurring situation where the fudge factors stop making sense as the statistics change. Thus the observation that SQL Profiles tend to sour over time.
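To make the "souring" effect concrete, here is a tiny worked example. The row counts and the 0.05 scale factor are made up for illustration; they are not taken from any real profile.

```sql
-- Hypothetical numbers, for illustration only.
-- At profile creation: the optimizer estimated 10,000 rows and the advisor
-- measured 500, so it stored SCALE_ROWS = 500 / 10000 = 0.05.
-- Later: statistics are regathered and the raw estimate drops to 600,
-- which is already close to reality, but the stored factor still applies.
select 10000 * 0.05 as scaled_rows_at_creation,   -- 500
       600   * 0.05 as scaled_rows_after_restats  -- 30
from dual;
```

The factor that corrected the estimate on day one now pushes it well below the truth, and the optimizer is free to pick a different plan based on that number.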


I have to give credit to Randolf Geist for making me take a second look at SQL Profiles. He commented on my Outlines post last week and recommended I give his post on SQL Profiles a look. I did, and it really got me thinking. One of the things I liked best about the post was that he created a couple of scripts to pull the existing hints from a statement in the shared pool or the AWR tables and create a SQL Profile from those hints using the DBMS_SQLTUNE.IMPORT_SQL_PROFILE procedure. This makes perfect sense because the hints are stored with every plan (that’s what DBMS_XPLAN uses to spit them out if you ask for them). Unfortunately this procedure is only lightly documented. He also had a nice script for pulling the hints from the V$SQL_PLAN view, which I have made use of as well.
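Roughly, the approach looks like the sketch below. It is a simplified illustration, not a copy of Randolf's scripts or of the ones listed here. The substitution variables, the profile name, and the fixed category and force_match values are assumptions, and since IMPORT_SQL_PROFILE is only lightly documented, check the parameter names against your own version before relying on it.

```sql
-- Sketch: build a SQL Profile from the outline hints of a cursor
-- that is still in the shared pool. Run from SQL*Plus.
declare
  ar_profile_hints sys.sqlprof_attr;  -- collection of hint strings
  cl_sql_text      clob;
begin
  -- The outline hints are stored as XML in OTHER_XML on one line of the plan.
  select extractvalue(value(d), '/hint') as outline_hints
  bulk collect into ar_profile_hints
  from xmltable('/*/outline_data/hint'
         passing (
           select xmltype(other_xml) as xmlval
           from   v$sql_plan
           where  sql_id = '&&sql_id'
           and    child_number = &&child_no
           and    other_xml is not null
         )
       ) d;

  -- The profile is attached to the statement text, not to the sql_id.
  select sql_fulltext
  into   cl_sql_text
  from   v$sql
  where  sql_id = '&&sql_id'
  and    child_number = &&child_no;

  dbms_sqltune.import_sql_profile(
    sql_text    => cl_sql_text,
    profile     => ar_profile_hints,
    category    => 'DEFAULT',            -- assumed; use another category to test
    name        => 'PROFILE_&&sql_id',   -- illustrative naming convention
    force_match => false                 -- true would also match differing literals
  );
end;
/
```

Swapping v$sql_plan for dba_hist_sql_plan (filtering on plan_hash_value instead of child_number) and taking the statement text from the AWR would give the AWR flavor of the same idea.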

So as always I have created a few scripts (borrowing from Randolf mostly).

create_sql_profile.sql – uses cursor from the shared pool

create_sql_profile_awr.sql – uses AWR tables

sql_profile_hints.sql – shows the hints in a SQL Profile for 10g

So here’s a little example:

Note: unstable_plans.sql and awr_plan_stats.sql are discussed here: Unstable Plans (Plan Instability)

SQL> @unstable_plans

Enter value for min_stddev:

Enter value for min_etime:

SQL_ID SUM(EXECS) MIN_ETIME MAX_ETIME NORM_STDDEV

------------- ---------- ----------- ----------- -------------

0qa98gcnnza7h 4 42.08 208.80 2.8016

SQL> @awr_plan_stats

Enter value for sql_id: 0qa98gcnnza7h

SQL_ID PLAN_HASH_VALUE EXECS ETIME AVG_ETIME AVG_LIO

------------- --------------- ------------ -------------- ------------ --------------

0qa98gcnnza7h 568322376 3 126.2 42.079 124,329.7

0qa98gcnnza7h 3723858078 1 208.8 208.796 28,901,466.0

SQL> @create_sql_profile_awr

Enter value for sql_id: 0qa98gcnnza7h

Enter value for plan_hash_value: 568322376

Enter value for category:

Enter value for force_matching:

PL/SQL procedure successfully completed.

SQL> @sql_profiles

Enter value for sql_text:

Enter value for name: PROFIL%

NAME CATEGORY STATUS SQL_TEXT FOR

------------------------------ --------------- -------- ---------------------------------------------------------------------- ---

PROFILE_0qa98gcnnza7h DEFAULT ENABLED select avg(pk_col) from kso.skew NO

SQL> set echo on

SQL> @sql_profile_hints

SQL> set lines 155

SQL> col hint for a150

SQL> select attr_val hint

2 from dba_sql_profiles p, sqlprof$attr h

3 where p.signature = h.signature

4 and name like ('&profile_name')

5 order by attr#

6 /

Enter value for profile_name: PROFILE_0qa98gcnnza7h

HINT

------------------------------------------------------------------------------------------------------------------------------------------------------

IGNORE_OPTIM_EMBEDDED_HINTS

OPTIMIZER_FEATURES_ENABLE('10.2.0.3')

ALL_ROWS

OUTLINE_LEAF(@"SEL$1")

FULL(@"SEL$1" "SKEW"@"SEL$1")

SQL> @sql_hints_awr

SQL> select

2 extractvalue(value(d), '/hint') as outline_hints

3 from

4 xmltable('/*/outline_data/hint'

5 passing (

6 select

7 xmltype(other_xml) as xmlval

8 from

9 dba_hist_sql_plan

10 where

11 sql_id = '&sql_id'

12 and plan_hash_value = &plan_hash_value

13 and other_xml is not null

14 )

15 ) d;

Enter value for sql_id: 0qa98gcnnza7h

Enter value for plan_hash_value: 568322376

OUTLINE_HINTS

-----------------------------------------------------------------------------------------------------------------------------------------------------------

IGNORE_OPTIM_EMBEDDED_HINTS

OPTIMIZER_FEATURES_ENABLE('10.2.0.3')

ALL_ROWS

OUTLINE_LEAF(@"SEL$1")

FULL(@"SEL$1" "SKEW"@"SEL$1")

A couple of additional points:

- Outlines and SQL Profiles both take the same approach to controlling execution plans. They both attempt to force the optimizer down a certain path by applying hints behind the scenes. This is in my opinion an almost impossible task. The more complex the statement, the more difficult the task becomes. The newest kid on the block in this area (in 11g) is called a baseline and while it doesn’t abandon the hinting approach altogether, it does at least store the plan_hash_value – so it can tell if it regenerated the correct plan or not.

- It does not appear that Outlines are being actively pursued by Oracle development anymore. So while they still work in 11g, they are becoming a little less reliable (and they were a bit quirky to begin with).

- SQL Profiles have the ability to replace literals with bind variables similar to the cursor_sharing parameter. This means you can have a SQL Profile that will match multiple statements which use literals without having to set cursor_sharing for the whole instance.

- Outlines take precedence over SQL Profiles. You can create both on the same statement and if you do, the outline will be used and the SQL Profile will be ignored. This is true in 11g as well, by the way.

- Outlines don’t appear to use the OPT_ESTIMATE hint. So I believe it is still a valid approach to accept a SQL Profile as offered by the SQL Tuning Advisor and then create an Outline on top of it. It seems to work pretty well most of the time. (Be sure to check the hints and the plan that gets produced.)

- Manually created SQL Profiles also don’t appear to use the OPT_ESTIMATE hint. So I believe it is also a valid approach to accept a SQL Profile as offered by the SQL Tuning Advisor and then create a manual SQL Profile on top of it. Note that you’ll have to create the new SQL Profile in a different category, then drop the original, then move the new SQL Profile into the category your sessions actually use. That makes this approach a bit more work than just creating an Outline in the DEFAULT category.
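The category shuffle described in the last point might look something like this. It is only a sketch: the profile names are the ones from the example that follows, the TEST category is arbitrary, and you should confirm the plan yourself before dropping anything.

```sql
-- 1. Try out the manual profile (created in category TEST) in this session only.
alter session set sqltune_category = 'TEST';

-- ... run the statement and confirm the plan with dbms_xplan.display_cursor ...

-- 2. Drop the advisor's profile, then move the manual one into DEFAULT
--    so that every session picks it up.
begin
  dbms_sqltune.drop_sql_profile(name => 'SYS_SQLPROF_012061f471d50001');

  dbms_sqltune.alter_sql_profile(
    name           => 'PROFILE_fknfhx8wth51q',
    attribute_name => 'CATEGORY',
    value          => 'DEFAULT'
  );
end;
/

-- 3. Put the session back on the default category.
alter session set sqltune_category = 'DEFAULT';
```

Testing in a separate category first means the rest of the instance keeps using the original profile until you are satisfied with the replacement.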

Have a look at the difference between the hints generated by the SQL Tuning Advisor and those created by a manual SQL Profile or an Outline (note that I have tried to change the object names to protect the innocent and in so doing may have made it slightly more difficult to follow):

SQL>

SQL> select * from table(dbms_xplan.display_cursor('&sql_id','&child_no',''));

Enter value for sql_id: fknfhx8wth51q

Enter value for child_no: 1

PLAN_TABLE_OUTPUT

------------------------------------------------------------------------------------------------------------------------------------------------------

SQL_ID fknfhx8wth51q, child number 1

-------------------------------------

SELECT /* test4 */ col1, col2, col3 ...

Plan hash value: 3163842146

----------------------------------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

----------------------------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | | | 1778 (100)| |

| 1 | NESTED LOOPS | | 1039 | 96627 | 1778 (1)| 00:00:33 |

| 2 | NESTED LOOPS | | 916 | 57708 | 1778 (1)| 00:00:33 |

|* 3 | TABLE ACCESS BY INDEX ROWID| TABLE_XXXX_LOOKUP | 446 | 17840 | 891 (1)| 00:00:17 |

|* 4 | INDEX RANGE SCAN | INDEX_XXXX_IS_CPCI | 12028 | | 18 (0)| 00:00:01 |

| 5 | TABLE ACCESS BY INDEX ROWID| TABLE_XXXX_IDENT | 2 | 46 | 2 (0)| 00:00:01 |

|* 6 | INDEX RANGE SCAN | INDEX_XXXXIP_17_FK | 2 | | 1 (0)| 00:00:01 |

|* 7 | INDEX UNIQUE SCAN | PK_TABLE_XXXX_ASSIGNMENT | 1 | 30 | 0 (0)| |

----------------------------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

3 - filter((

...

4 - access("L"."COL1"=:N1)

6 - access("L"."COL2"="I"."COL1")

Note

-----

- SQL profile SYS_SQLPROF_012061f471d50001 used for this statement

85 rows selected.

SQL> @sql_profile_hints

Enter value for name: SYS_SQLPROF_012061f471d50001

HINT

------------------------------------------------------------------------------------------------------------------------------------------------------

OPT_ESTIMATE(@"SEL$1", TABLE, "L"@"SEL$1", SCALE_ROWS=0.0536172171)

OPT_ESTIMATE(@"SEL$1", INDEX_SKIP_SCAN, "A"@"SEL$1", PK_TABLE_XXXX_ASSIGNMENT, SCALE_ROWS=4)

COLUMN_STATS("APP_OWNER"."TABLE_XXXX_ASSIGNMENT", "COL1", scale, length=6 distinct=1234 nulls=0 min=1000000014 max=1026369632)

COLUMN_STATS("APP_OWNER"."TABLE_XXXX_ASSIGNMENT", "COL2", scale, length=12 distinct=2 nulls=0)

COLUMN_STATS("APP_OWNER"."TABLE_XXXX_ASSIGNMENT", "COL3", scale, length=12 distinct=2 nulls=0)

TABLE_STATS("APP_OWNER"."TABLE_XXXX_ASSIGNMENT", scale, blocks=5 rows=2400)

OPTIMIZER_FEATURES_ENABLE(default)

7 rows selected.

SQL> -- no direct hints - only stats and scaling on the profile created by the SQL Tuning Advisor

SQL> -- (i.e. the dreaded OPT_ESTIMATE hints and no directive type hints like INDEX or USE_NL)

SQL>

SQL> -- now let's try an outline on top of it

SQL> @create_outline

Session altered.

Enter value for sql_id: fknfhx8wth51q

Enter value for child_number: 1

Enter value for outline_name: KSOTEST1

Outline KSOTEST1 created.

PL/SQL procedure successfully completed.

SQL> @outline_hints

Enter value for name: KSOTEST1

HINT

------------------------------------------------------------------------------------------------------------------------------------------------------

USE_NL(@"SEL$1" "A"@"SEL$1")

USE_NL(@"SEL$1" "I"@"SEL$1")

LEADING(@"SEL$1" "L"@"SEL$1" "I"@"SEL$1" "A"@"SEL$1")

INDEX(@"SEL$1" "A"@"SEL$1" ("TABLE_XXXX_ASSIGNMENT"."COL1" "TABLE_XXXX_ASSIGNMENT"."COL2" "TABLE_XXXX_ASSIGNMENT"."COL3"))

INDEX_RS_ASC(@"SEL$1" "I"@"SEL$1" ("TABLE_XXXX_IDENT"."COL1"))

INDEX_RS_ASC(@"SEL$1" "L"@"SEL$1" ("TABLE_XXXX_LOOKUP"."COL1" "TABLE_XXXX_LOOKUP"."COL2"))

OUTLINE_LEAF(@"SEL$1")

ALL_ROWS

DB_VERSION('11.1.0.7')

OPTIMIZER_FEATURES_ENABLE('11.1.0.7')

IGNORE_OPTIM_EMBEDDED_HINTS

11 rows selected.

SQL> -- no OPT_ESTIMATE hints on the outline

SQL> -- directive type hints - INDEX, USE_NL, etc...

SQL>

SQL> -- now let's try creating a manual profile

SQL> @create_sql_profile.sql

Enter value for sql_id: fknfhx8wth51q

Enter value for child_no: 1

Enter value for category: TEST

Enter value for force_matching:

PL/SQL procedure successfully completed.

SQL> @sql_profile_hints

Enter value for name: PROFILE_fknfhx8wth51q

HINT

------------------------------------------------------------------------------------------------------------------------------------------------------

IGNORE_OPTIM_EMBEDDED_HINTS

OPTIMIZER_FEATURES_ENABLE('11.1.0.7')

DB_VERSION('11.1.0.7')

ALL_ROWS

OUTLINE_LEAF(@"SEL$1")

INDEX_RS_ASC(@"SEL$1" "L"@"SEL$1" ("TABLE_XXXX_LOOKUP"."COL1" "TABLE_XXXX_LOOKUP"."COL2"))

INDEX_RS_ASC(@"SEL$1" "I"@"SEL$1" ("TABLE_XXXX_IDENT"."COL1"))

INDEX(@"SEL$1" "A"@"SEL$1" ("TABLE_XXXX_ASSIGNMENT"."COL1" "TABLE_XXXX_ASSIGNMENT"."COL2" "TABLE_XXXX_ASSIGNMENT"."COL3"))

LEADING(@"SEL$1" "L"@"SEL$1" "I"@"SEL$1" "A"@"SEL$1")

USE_NL(@"SEL$1" "I"@"SEL$1")

USE_NL(@"SEL$1" "A"@"SEL$1")

11 rows selected.

SQL> -- no OPT_ESTIMATE with the SQL Profile we created manually !

SQL> -- again it's directive - USE_NL, INDEX, LEADING, etc...

SQL>

So I apologize to all you SQL Profiles out there who have been lumped together by my prejudiced view, just because of the acts of a few of your brethren (i.e. the ones created by the SQL Tuning Advisor). SQL Profiles do indeed have all the capabilities of Outlines and probably are a better choice in most cases than Outlines.

Thanks again to Randolf Geist for his comments and his ideas on creating manual SQL Profiles.