E4 Wrap Up – Part I – OLTP Bashing

Well, the Enkitec Extreme Exadata Expo (E4) is now officially over. I thoroughly enjoyed the event. I personally think Richard Foote stole the show with his clear and concise explanation of why a full table scan is not a straightforward operation on Exadata, and why that makes it so difficult for the optimizer to cost it properly. But Maria Colgan came out with a fiery talk on the optimizer that gave him a good run for his money (she actually had the highest average rating from the attendees who filled out evaluation forms, by the way – so congratulations, Maria!). Of course, there were many excellent presentations from many very well-known Oracle practitioners. Overall it was an excellent conference (in my humble opinion), due in large part to the high quality of the speakers and the effort they put into their presentations. I am also thankful that Intel agreed to sponsor the event and that Oracle supported it by allowing so many of their technical folks to participate.

While I felt that the overall message presented at the conference was pretty balanced, I did leave with a couple of general impressions that didn’t feel quite right. Of course, the ability to express one’s opinion is one of the founding principles of our country, so I am going to do a series of posts on generalities I heard expressed that I didn’t completely agree with.

The first was that I got the impression that some people think Exadata isn’t good at OLTP. No one really said that explicitly. They said things like “it wasn’t designed for OLTP” and “OLTP workloads don’t take advantage of Exadata’s secret sauce” (I may have even made similar comments myself). While these types of statements are not incorrect, they left me with the feeling that some people thought Exadata just flat wasn’t good at OLTP.

I disagree with this blanket sentiment for several reasons:

- While it’s true that OLTP workloads generally don’t make the best use of the main feature that makes Exadata so special (namely offloading), I have to say that in my experience it has shown itself to be a very capable platform for handling the single block access pattern that characterizes what we often describe as OLTP workloads. I’ve observed many systems running on Exadata that have average physical single-block read times in the sub-1ms range. These are very good times and compare favorably to systems that store all their data on SSD storage. So the flash cache feature actually works very well, which is not too surprising when you consider that Oracle has been working on caching algorithms for several decades.
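For anyone who wants to check the sub-1ms claim on their own system, the average single-block read time is simply the total time waited divided by the number of waits for the relevant event (on Exadata that is usually `cell single block physical read`). Here’s a minimal Python sketch of that arithmetic. The wait counts are made-up numbers for illustration, not figures from any system mentioned in this post.

```python
def avg_wait_ms(total_waits: int, time_waited_s: float) -> float:
    """Average latency in milliseconds for a single wait event."""
    if total_waits == 0:
        raise ValueError("no waits recorded for this event")
    return time_waited_s / total_waits * 1000.0


# Hypothetical figures for illustration only:
# 12,000,000 single-block reads totalling 7,800 seconds of wait time.
latency_ms = avg_wait_ms(12_000_000, 7_800.0)
print(f"{latency_ms:.2f} ms")  # 0.65 ms
```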

- I think part of the reason for the general impression that OLTP doesn’t work well on Exadata is the human tendency to make snap judgements based on reality vs. expectations, rather than actually thinking through the relevant facts. For example, when you go to a movie that has been hyped as one of the best of the year and a great cinematic achievement, you are more likely to come away feeling that the movie was not that great, simply because it didn’t live up to your expectations. A little-known movie, on the other hand, is more likely to impress you simply because you weren’t expecting much. When you sit down and actually evaluate the movies side by side, you will probably conclude that the hyped movie was indeed better (people don’t usually bestow accolades on totally worthless stuff). I think that, at least to some degree, OLTP-type workloads on Exadata suffer from the same issue. The expectations for the platform in general are so high that even good to excellent results fall short of the massive expectations created by some of the impressive results with data warehouse workloads. But that doesn’t mean the platform is not capable of matching the performance of any other platform you could build at a similar price point.

- I don’t think I’ve ever seen a true OLTP workload. That is to say, I can’t recall ever looking at a system that didn’t have some long-running reporting component or batch process that falls outside the simple single-block access (OLTP) category. So I believe that the vast majority of systems categorized as OLTP should more correctly be called “mixed” workloads. In these types of systems, the offloading capability of Exadata can certainly make a big difference for the long-running components of the system, but it can also improve performance on the single-block access work by reducing the resource contention caused by long-running queries, contention that is unavoidable on standard Oracle architecture.

- Very few of the Exadata systems we’ve worked on over the last few years are supporting a single application or even a single database. Consolidation has become the name of the game for many (maybe most) Exadata implementations. I did a presentation at last year’s Hotsos Symposium where I compiled statistics from 51 Exadatas that we had worked on. 67% were being used as consolidation platforms. This makes it even more likely that an OLTP type workload will benefit from running on the Exadata platform.

So does Exadata run standalone “pure OLTP” workloads 10X faster than any other standard Oracle-based system you could build yourself?

No it does not.

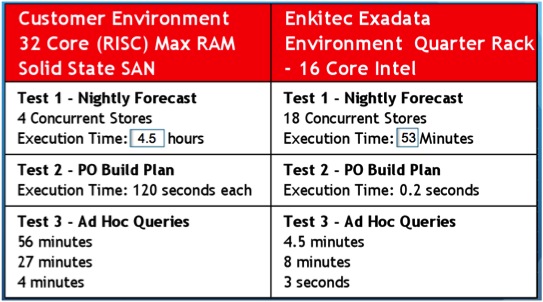

But it does work as well as almost any system you could build, regardless of how much money you spend on the components. By way of proof, I’ll tell you a story about a system that we benchmarked on an Exadata V2 quarter rack. The benchmark was on a batch process that updated well over a billion records, one row at a time, via an index. The system we were comparing against was an M5000/Solaris system with 32 cores, with all data stored on an SSD SAN. The benchmark showed Exadata to be a little over 4 times faster. This was primarily because most of the work was logical I/O serviced by the buffer cache on both platforms, so the faster CPUs in the Exadata accounted for most of the gains. Nevertheless, the system was not migrated to Exadata. A new system was built using a faster Intel-based server, which made up the CPU speed difference (and in fact exceeded the specs of the V2), and a more capable SSD-based SAN was installed. The resulting system ran the benchmark in about the same time as the original V2 quarter rack (actually it was very slightly faster). Unfortunately, the SAN alone cost more than the Exadata. And the real-life system also did a bunch of other stuff, like some long-running ad hoc queries. Guess which platform dealt with those better. 😉 Here’s a slide that summarizes some of the results.

In fairness, I should point out that there is a subset of “OLTP” workloads that are very write intensive. Since writes to data files in Oracle are usually asynchronous, while writes to log files usually must complete before a transaction can commit, it’s usually the log file writes that are the bottleneck in these types of systems. However, if the synchronous log file writes can be avoided (or optimized), much higher transaction rates become possible and writes to data files can become the bottleneck. In those cases, pure write IOPS can be a limiting factor. My opinion is that such systems are relatively rare, but they do exist. Exadata is not currently the best possible option for these extremely write-intensive workloads. I say currently because, at the time of this writing, the storage software does not include any sort of write-back cache for buffering writes to data files. However, this feature is expected to be released in the near future. 😉
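To see why synchronous redo writes matter so much, here’s a deliberately simplified sketch. If a session must wait for each log write to complete before its commit returns, the log write latency caps how many commits that one session can do per second. The latencies below are hypothetical, and the model ignores group commit (where one log write covers commits from many sessions), CPU time and everything else, so treat it as a back-of-the-envelope bound rather than a prediction.

```python
def max_serial_commits_per_sec(log_write_ms: float) -> float:
    """Upper bound on commits per second for ONE session that commits
    serially and waits for each synchronous redo write to finish.
    Deliberately ignores group commit, CPU time and all other work."""
    if log_write_ms <= 0:
        raise ValueError("log write latency must be positive")
    return 1000.0 / log_write_ms


# Hypothetical log write latencies in milliseconds.
for ms in (0.5, 1.0, 5.0):
    print(f"{ms} ms per log write -> at most {max_serial_commits_per_sec(ms):.0f} commits/s")
```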

So that’s it for the OLTP topic.

Stay tuned for Part II, where I’ll discuss another general impression with which I didn’t really agree…

Good one Kerry. Looking forward to part 2 !

Thanks !

PS: Getting this Captcha correct takes me more time than reading post + writing the comment ! 😛

Sorry – replacing it as we speak with akismet.

Very good write-up. It’s pleasing to read a write-up from a highly respected authority on the subject giving a positive message. There are too many out there who are quite damning about Exadata, specifically around OLTP. I agree that Oracle’s marketing is full of hyperbole when it comes to Exa, and perhaps Oracle are a little too focussed on the sale regardless of the customer’s real requirement. But sometimes it’s too easy to continually tweet/blog/etc. negatively about Exadata when in reality Exadata has proven, can prove and will continue to prove its value.

I’m going to be brave and put my hand up as one of the people who have criticised Exadata’s OLTP performance. Kerry, you are one of the most respected names in the industry, so I’m already on the back foot. Also, I work for a company which (kind of) competes against Exadata, so I have to declare a bias. On the other hand, I used to work for Oracle and have real-world experience of selling, delivering and supporting multiple Exadata systems for Oracle customers in the UK.

I can’t argue with the first three points above – and I absolutely agree that there is no real-world environment that runs “pure OLTP”. Point 4 is interesting because in the UK the majority of Exadata customers purchased it to run single systems – in fact I cannot think of a customer I worked with who intended to run a consolidation environment on Exadata. But hey, I guess that just means the US and the UK do things slightly differently.

The issue I have, though, is that you cannot have a purely technical discussion about Exadata and “OLTP bashing”, because the issue is not confined to just technology. It’s about the way Oracle positions, markets and sells Exadata. I cannot see that you can separate the two.

Oracle customers have a requirement, which is invariably some mixture of different workloads: some random IO, some sequential IO, some proportion of reads to writes in the online day, maybe a set of batch reports in the evening or on weekends. Every application is different, every customer is different. But Oracle’s proposed solution is always Exadata, because it’s “the strategic platform for all database workloads”. Q: What solution is best for me? A: Exadata. If the answer is always Exadata, what’s the point in asking the question?

Exadata customers pay a premium to license Exadata storage servers, but not every customer is going to benefit from the features they offer (and therefore get a return on their investment). We know that Exadata was designed as a data warehousing solution – although this fact doesn’t imply that OLTP performance will be bad. But there ought to be a more reasonable view taken of the customer’s requirements before a solution is designed. Oracle upgraded their own internal e-Business Suite application and decided to put it on an M9k instead of Exadata, so clearly there must be application workloads where Exadata is not the best choice. It might be a good choice, it might be very reasonable at OLTP-type workloads, but it isn’t the *only* choice.

By the way, I want to make it clear that I’m talking about Oracle here and not Enkitec – I have the utmost respect for you guys and know that your first priority is the customer’s requirement. And the truth is I think Exadata is a great technical achievement, I just don’t like the way it is positioned.

Interesting point. I do wish sales people could present a more balanced view regardless of what industry they are in. I personally am much more inclined to buy from someone who will honestly discuss the pros and cons of a product. Thanks for your comments.

Thanks for the review Kerry. Glad to see some real world experience on Exadata.

BTW – if you run across anyone building a data vault-style data warehouse on Exadata, I would love to hear about it.

And... Exadata is still a strong platform because you are running just “databases” on it and nothing else. And that alone is a “big” difference. Let me give some real-world examples:

>> First. I’ve diagnosed a performance problem on a newly migrated database from an old to new SAN environment (TIER1). And apparently the reads are a lot slower because the SAN storage processor is already saturated because they’ve got a mix of MS Exchange Server workload, databases, and other uses for that SAN (NFS, CIFS, etc.). Simply put, the requirements are exceeding the capacity causing the storage processor to be affected which leads to bad IO times. Since this database is mission critical, they’ve migrated it to TIER2 storage (supposedly a slower array) which apparently is way faster than their TIER1 and that solved their database IO issues.

>> Second. I’ve seen a case where an M5000 box (64 CMT cores) is backed by 800+ spindles of Symmetrix storage using the EMC “FAST” feature. But even though it’s an M5000, they are not using it only for databases; it’s a multi-tenant Solaris environment making intensive use of zones. In one M5000 box they’ve got 7 zones dedicated to app servers and only 2 zones for databases. The 1st database zone holds EMGC, the other holds 7 databases. The end result is a mixed workload (file IO and database IO) whose parts don’t really get along with each other, and they’re not really enforcing the resource management features of Solaris, so it’s a big playground for all the zones. When all of them reach their peak periods, the latency issues and high SYS CPU follow. Simply put, even with a Ferrari SAN, if you are not doing the right thing, you won’t get the right results.

I’ve got some screenshots here http://goo.gl/MYKxK

see

>> average latency issue correlation of SAN, datafiles, session IO

>> IO issue – SAN performance validation – saturated storage processor

-Karl

> high SYS CPU.

Hi Karl,

How do you reckon high kernel mode CPU utilization is the result of slow I/O ?

The IO subsystem is certainly fast enough for their IO workload requirements. The real problem is IO scheduling, due to the mixed workload from the other zones used for app servers plus the database zones, and they are just using a default VxFS, which does not have Concurrent Direct IO. Whenever IO contention happens (busy periods), the smtx column from mpstat shoots up (mutex contention), and lockstat (from GUDS) verified that VxFS locks also shoot up during these periods. That’s where the high SYS CPU comes from, and it makes the session IO latency even worse.

-Karl

Thanks Karl, that explains it perfectly. A software problem (wrong VxFS config for database) showing up as wasted kernel mode cycles. Ugh. Thanks.

> Unfortunately the SAN alone cost more than the Exadata.

I’m trying to envision what this SAN was given it was more expensive than a quarter rack Exadata. I presume this cost contrast is some SAN versus Exadata HW+Exadata Storage Server Software only (not the RAC licenses)?

Also, I’d like to point out that I didn’t hear any “OLTP bashing” in any of the E4 sessions I attended. I did hear words that tried as gently as possible to unravel the grossly exaggerated hype shoveled out by Oracle where Exadata and the OLTP use case are concerned.

Hi Kevin,

Yes, I was talking hardware only. Looking back at my notes – it was a Hitachi SAN (AMS2500) with 30x200G SSD’s and 38x450G disks. They told me they spent roughly $400K for the SAN after whatever discounts they were able to negotiate. List price for a quarter rack Exadata is $330K.

> List price for a quarter rack Exadata is $330K.

I thought it was $10K per HDD so shouldn’t that be at least $360K for the quarter Rack ?
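For reference, the $360K figure falls out of the per-disk pricing: a quarter rack has 3 storage cells with 12 disks each. A quick sketch of the arithmetic, using the $10K per disk list figure mentioned above (list price only, no discounts, and covering only the storage server software):

```python
# Quarter rack storage tier: 3 storage cells, 12 disks per cell.
CELLS = 3
DISKS_PER_CELL = 12
# List price per disk for the storage server software, as quoted in the thread.
LIST_PER_DISK_USD = 10_000

cell_software_list_usd = CELLS * DISKS_PER_CELL * LIST_PER_DISK_USD
print(f"${cell_software_list_usd:,}")  # $360,000
```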

Hmmm… so they got an SSD SAN of about 23TB for 400K ? That doesn’t sound all that good.
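Here is where the “about 23TB” comes from, using the drive counts Kerry quoted. This is raw capacity in decimal GB, before any RAID or sparing overhead, set against the roughly $400K the customer reported spending:

```python
# Drive counts and sizes (GB) from the Hitachi AMS2500 described above.
ssd_gb = 30 * 200
hdd_gb = 38 * 450
raw_gb = ssd_gb + hdd_gb

# Approximate price the customer reported paying, after discounts.
san_cost_usd = 400_000

print(f"raw capacity: {raw_gb / 1000:.1f} TB")           # raw capacity: 23.1 TB
print(f"cost per raw GB: ${san_cost_usd / raw_gb:.2f}")  # cost per raw GB: $17.32
```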

So this customer decided on x64 processors over SPARC. They tested 32 cores of SPARC. Were they able to go instead with a mere 24 Xeon 5670 cores?

Whatever!

No, really, Kerry. I certainly don’t follow the price list. I thought perhaps you had new information. I take it it is more like $360K list then. So the customer went with a bit more than $360K worth of SAN kit and some unknown number of database grid cores when they abandoned SPARC. Would you be willing to tell us how many Intel cores the customer abandoned SPARC for? Was it the same number of database grid cores as an Exadata quarter rack (e.g., 24)?

Pulled it off the price list yesterday. The DB server was a Dell R910 with 16 cores (the Exadata was a V2, so also 16 cores). By the way, they had a 70G buffer cache and 4x8G HBAs. The long-running ad hoc stuff improved (about 2X according to my notes). Keep in mind I did not do a review of the system after the migration, just had a conversation where I took some notes. Also, this was a couple of years ago, so my memory is not totally clear on all the details (thus referring to the notes). I used this case study in my DIY Exadata talk (which I copied from your original idea). This story was one where an alternative platform was chosen that achieved very similar results, but only for the batch job portion of the workload, which was an isolated single-row workload (as close to pure OLTP as you can get, I think). The ad hoc query part obviously didn’t fare as well, but it was still faster than on the original system.

OK, I get it now. List for the hardware is 330K. A Quarter rack would also carry list $360K for the cell software and then it’s time to talk database+RAC (12 cores is, what, list EE+RAC $720K?).

This customer went with a 4S (non-RAC) server, so I presume they saved on RAC and they also saved on the $360K list Exadata software. I wonder, all told, if they didn’t come out ahead on price. Their mainline OLTP came in “slightly better” and the ancillary tasks spoken of in the above table were “better than the SPARC system” (but to an unspecified degree, so we don’t know if it was 2x the SPARC system or more).

Kerry, you don’t attribute PO Build Plan 600x (120s down to .2s) to hardware though, right? There is nothing about a V2 quarter rack that gets someone 600x running the same query (plan) against the same data. In fact, .2s is longer than the fixed startup cost in invoking Parallel Query.

Doesn’t the flash log capability of the storage server give some of the write back capabilities when writing to redo logs ?

I assume you are hinting at the full write-back capabilities rumored to be coming with the next iteration of Exadata. However, since redo writes are currently issued to flash and disk in parallel and an acknowledgment from either one is enough, I think the addition of a write-back cache would not increase performance by much.

Thanks

Robin

Hi Robin,

Yes – I was talking about writes to data files. It is more than rumor as Andy Mendelsohn mentioned it was in the works at the E4 conference. Rumor has it that this feature has been in development for a long time by the way. I first heard whispers in the shadows a couple of years ago.

Kerry

Yeah, Exadata became a perfectly OLTP-capable system around last OOW when the Smart Flash logging feature was introduced in the storage cells.

Kevin,

I can’t seem to reply to the existing line of comments any more – maybe too many sub-replies.

Anyway, I’m sure the overall cost of the system they implemented was in the ballpark of an Exadata quarter rack – software and hardware all in. Of course, software prices are much more heavily discounted than hardware prices. On the test results, the customer actually participated in the tests and certified the results, but I was skeptical of what they called the “PO Build Plan”. They assured us it did what it was supposed to (maybe there was a scan that drove the processing that was avoided via a storage index). By the way, I once did some performance work for a company on what they called their “daily job”. Contrary to what you might think from the name, they ran it every 10 minutes. So names can be misleading.

We only tested a handful of adhoc queries, but users ran these types of queries throughout the day. The ones that were tested showed results that were more like an order of magnitude faster than they were able to achieve on the original platform or on the new platform. And I’m sure that you don’t have any problem imagining how that might happen. 😉 So as I said, in my DIY Exadata presentation I use this as an example of a workload (the batch process) that was able to get similar performance from a more expensive hardware solution but probably similar total cost. But all the ancillary benefits for long running stuff (like the ad hoc queries) obviously didn’t get the benefit of the Exadata rocket sauce with the platform that was implemented.

Oh, and if it’s the 600X on the “PO Build Plan” that threw you for a loop, it shouldn’t. We have many, many examples of queries that run thousands of times faster than on traditional systems. Most of them are serial executions of statements that spend a lot of time shipping a bunch of useless data back to the compute node.

Kerry

Thanks for staying with me on this thread, Kerry. There is something I just do not understand but want to. You said:

“We have many, many examples of queries that run thousands of times faster than on the traditional systems. Most of them are serial executions of statements that spend a lot time shipping a bunch of useless data back to the compute node.”

Would that be serial execution serviced by a smart scan?

Also, regarding a speedup of 600X that leaves me at .2 seconds, how many kcfis turbo reads can even be issued in .2s? Storage index I/O elimination requires an IPC over iDB (skgxp()) to cellsrv for each and every 1MB that gets eliminated. So even if this was a total wipe-out of PIO due to storage index I wonder how many I/Os that actually was? Perhaps 10,000? That would eliminate a mere 10GB (20us per IPC). Even before the first kcfis smart scan read request there would be set-up overhead between the CQ and the workers. I guess I just don’t see much I/O elimination being possible in .2s. Just smells a lot like a different plan to me. I have to admit I never measured how much I/O elimination can happen in any given period of time…it would have been easy to test now that I think of it.
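The back-of-the-envelope numbers above can be reproduced in a few lines. This sketch just restates Kevin’s assumptions (about 20us per IPC and 1MB eliminated per IPC, the latter of which he revises later in the thread) rather than anything measured:

```python
# Kevin's stated assumptions, not measurements.
IPC_COST_S = 20e-6   # roughly 20 microseconds per IPC to cellsrv
MB_PER_IPC = 1       # 1 MB storage region eliminated per IPC (revised later in the thread)
ELAPSED_S = 0.2      # the 0.2 s the query took after the 600x speedup

ipcs = round(ELAPSED_S / IPC_COST_S)
eliminated_gb = ipcs * MB_PER_IPC / 1000

print(ipcs, eliminated_gb)  # 10000 10.0
```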

You’re killing me man!

The answer to the first question is yes. I meant long running serial execution. When smart scans kick in on some portion(s) of the statement the magic happens.

I’ll post some stats showing actual elapsed times on some queries later today when I get a bit more time. But yes – smart scans can kick in and elapsed times can be in the hundredths of a second range. I’m not talking parallel query, just plain old serial execution.

Here’s an example from my 2 year old X2 with slower 5670 chips:

```
SYS@DEMO1> @table_size
Enter value for owner: KSO
Enter value for table_name: CLASS_SALES
Enter value for type:

OWNER                SEGMENT_NAME                   TYPE               TOTALSIZE_MEGS TABLESPACE_NAME
-------------------- ------------------------------ ------------------ -------------- ------------------------------
KSO                  CLASS_SALES                    TABLE                     9,174.0 USERS
                                                                       --------------
sum                                                                           9,174.0

Elapsed: 00:00:00.05
SYS@DEMO1> select count(*) from kso.class_sales;

  COUNT(*)
----------
  90000000

Elapsed: 00:00:04.49
SYS@DEMO1> select count(*) from kso.class_sales where end_date = '08-SEP-08';

  COUNT(*)
----------
         4

Elapsed: 00:00:00.05
SYS@DEMO1> select count(*) from kso.class_sales where end_date = '08-SEP-08';

  COUNT(*)
----------
         4

Elapsed: 00:00:00.04
SYS@DEMO1> select count(*) from kso.class_sales where end_date = '08-SEP-08';

  COUNT(*)
----------
         4

Elapsed: 00:00:00.05
SYS@DEMO1> select count(*) from kso.class_sales where end_date = '08-SEP-08';

  COUNT(*)
----------
         4

Elapsed: 00:00:00.03
SYS@DEMO1> @x

PLAN_TABLE_OUTPUT
------------------------------------------------------------------------------------------
SQL_ID  az1x483krw72t, child number 0
-------------------------------------
select count(*) from kso.class_sales where end_date = '08-SEP-08'

Plan hash value: 3145879882

------------------------------------------------------------------------------------------
| Id  | Operation                  | Name        | Rows  | Bytes | Cost (%CPU)| Time     |
------------------------------------------------------------------------------------------
|   0 | SELECT STATEMENT           |             |       |       |   319K(100)|          |
|   1 |  SORT AGGREGATE            |             |     1 |     9 |            |          |
|*  2 |   TABLE ACCESS STORAGE FULL| CLASS_SALES | 12874 |   113K|   319K  (1)| 00:00:02 |
------------------------------------------------------------------------------------------

Predicate Information (identified by operation id):
---------------------------------------------------

   2 - storage("END_DATE"='08-SEP-08')
       filter("END_DATE"='08-SEP-08')

Note
-----
   - dynamic sampling used for this statement (level=2)


24 rows selected.

Elapsed: 00:00:00.01
SYS@DEMO1> @fsx
Enter value for sql_text:
Enter value for sql_id: az1x483krw72t

SQL_ID         CHILD  PLAN_HASH  EXECS  AVG_ETIME AVG_PX OFFLOAD IO_SAVED_% SQL_TEXT
------------- ------ ---------- ------ ---------- ------ ------- ---------- ----------------------------------------------------------------------
az1x483krw72t      0 3145879882      4        .04      0 Yes         100.00 select count(*) from kso.class_sales where end_date = '08-SEP-08'

Elapsed: 00:00:00.02
SYS@DEMO1>
SYS@DEMO1> -- let's try it without smart scans
SYS@DEMO1>
SYS@DEMO1> alter session set cell_offload_processing=false;

Session altered.

Elapsed: 00:00:00.00
SYS@DEMO1> select count(*) from kso.class_sales where end_date = '08-SEP-08';

  COUNT(*)
----------
         4

Elapsed: 00:00:42.79
```

So smart scan can certainly occur even on a large table in a few hundredths of a second (with a storage index). And despite the big IB pipe between the storage servers and the DB servers, it still takes a long time to ship 10G of data (roughly 43 seconds without smart scan vs. .04 with smart scan).
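For readers who haven’t looked at storage indexes before, the basic idea is that the storage keeps a min/max summary of column values for each region of a table and skips any region whose range can’t contain the value being searched for. Here’s a toy Python sketch of that pruning idea. `Region`, `build_regions` and `count_equal` are hypothetical names made up for this illustration, and the real feature (maintained automatically in storage cell memory, per storage region) is considerably more involved.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Region:
    """Hypothetical storage region: its END_DATE values plus a min/max summary."""
    values: list
    lo: date
    hi: date


def build_regions(values, rows_per_region):
    """Split a column into fixed-size regions and record min/max for each."""
    regions = []
    for i in range(0, len(values), rows_per_region):
        chunk = values[i:i + rows_per_region]
        regions.append(Region(chunk, min(chunk), max(chunk)))
    return regions


def count_equal(regions, target):
    """Count rows equal to target, skipping regions whose [lo, hi] range
    cannot contain it. Returns (matching_rows, regions_actually_scanned)."""
    matches = 0
    scanned = 0
    for region in regions:
        if target < region.lo or target > region.hi:
            continue  # pruned: this region is never read
        scanned += 1
        matches += sum(1 for v in region.values if v == target)
    return matches, scanned


# 1,000 rows, 10 per day, stored in date order (i.e. well clustered).
rows = [date(2008, 1, 1) + timedelta(days=i // 10) for i in range(1000)]
regions = build_regions(rows, rows_per_region=50)

hits, scanned = count_equal(regions, date(2008, 1, 8))
print(hits, scanned, len(regions))  # 10 1 20
```

With perfectly clustered data only 1 of the 20 regions is read, and a date that falls outside every region’s range reads nothing at all. If the same dates were scattered randomly across the table, most regions would span most of the date range and very little could be skipped.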

Killin’ ya? Nah..just helping you make a great read for your readers 🙂

So, of course I know that serial execution can be serviced with smart scan. I just needed that to springboard into how many turbo reads that equates to for a given table, but I actually flubbed in my last installment. I spoke of how many IPC calls to cellsrv the lone foreground (serial execution) has to make to find out it has no real work to do. I said it was an IPC per 1MB storage region, when in fact each turbo read is for an ASM AU, so in your CLASS_SALES example there are about 2300 turbo read requests being handled by storage, or about 22us each. That’s cool and really only possible because of RDS zero copy. I remember how much longer such IPC took before the zero-copy enhancements to RDS, and it was not pretty. Thanks for the info.
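Kevin’s revised numbers can be checked the same way. This sketch assumes a 4MB ASM allocation unit (a common Exadata setting, and what his ~2300 figure implies) and uses 0.05s, the slowest of the smart scan runs in Kerry’s transcript:

```python
import math

TABLE_MB = 9174.0   # CLASS_SALES size reported by @table_size
AU_MB = 4           # assumed ASM allocation unit size
ELAPSED_S = 0.05    # slowest smart scan run in the transcript

requests = math.ceil(TABLE_MB / AU_MB)
per_request_us = ELAPSED_S / requests * 1e6

print(requests, f"{per_request_us:.1f} us")  # 2294 21.8 us
```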

I do have a couple of questions/comments about the following though:

“And despite the big IB pipe between the storage servers and the DB servers it still takes a long time to ship 10G of data (roughly 43 seconds without smart scan vs. .04 with smart scan”

1) Was that 43 second smart-scan-disabled query serviced in the direct path or did it flood the SGA? Either way, in my experience, it is *usually* CPU that throttles a single foreground from handling any more than about 300MB/s of count(*) action whether iDB-o-IB or pretty much any other storage plumbing.

2) If things are configured appropriately, the second non-smart query should drag blocks from flash. A serial scan involving PIO from spinning media (SATA?) should indeed be some 1000x (43 vs .04) slower than not touching a single block of storage (100% IO_SAVED). I wonder how that compares to the same scan from fast storage? If it is no faster than 43 seconds, then point #1 I made above applies (a single Xeon 5670 core handling 213MB/s of data flow is close to max).
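The 213MB/s figure is just the table size divided by the non-offloaded elapsed time. A quick sketch using the numbers from the transcript above (9,174MB, 42.79s without offload, .04s average with smart scan). Note that the rounded 43 seconds gives the ~213MB/s quoted, while 42.79s gives about 214MB/s:

```python
TABLE_MB = 9174.0      # CLASS_SALES size
NO_OFFLOAD_S = 42.79   # cell_offload_processing=false run
SMART_SCAN_S = 0.04    # AVG_ETIME reported by @fsx

scan_rate_mb_s = TABLE_MB / NO_OFFLOAD_S
speedup = NO_OFFLOAD_S / SMART_SCAN_S

print(f"{scan_rate_mb_s:.0f} MB/s without offload")  # 214 MB/s without offload
print(f"smart scan roughly {speedup:.0f}x faster")   # smart scan roughly 1070x faster
```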

In the end, I thank you for entertaining this thread. Knowing how long it takes to find out there is *no work* to be done is an important attribute of a system. In fact, I wonder how that would compare to having a conventional index on end_date cached in the SGA to achieve the same effort elimination. Looks like it might be a good contest actually.

P.S. Any chance we can see the schema for CLASS_SALES?

Deep sigh.

1. Direct path reads. There is a little CPU burned as well on the compute node (as you can see in the snapper output below), since the storage software can’t filter rows for the DB when offloading is turned off. Here’s a little snapper output from the compute node while the non-smart-scan, direct path read, full scan was happening:

```
SYS@DEMO1> @sp2
Sampling with interval 5 seconds, 1 times...

-- Session Snapper v3.11 by Tanel Poder @ E2SN ( http://tech.e2sn.com )

--------------------------------------------------------------
Active% |    SID | EVENT                     | WAIT_CLASS
--------------------------------------------------------------
    90% |    904 | direct path read          | User I/O
    10% |    904 | ON CPU                    | ON CPU

---------------------------------------------------
Active% | PLSQL_OBJE | PLSQL_SUBP | SQL_ID
---------------------------------------------------
   100% |            |            | az1x483krw72t

--  End of ASH snap 1, end=2012-08-22 19:24:40, seconds=5, samples_taken=42


PL/SQL procedure successfully completed.
```

Also, it doesn’t appear to be bottlenecked on CPU. Here is a little output from top during the 43 seconds:

```
top - 19:21:08 up 69 days, 23:19,  3 users,  load average: 1.15, 1.21, 1.19
Tasks: 819 total,   1 running, 817 sleeping,   0 stopped,   1 zombie
Cpu(s):  0.6%us,  0.2%sy,  0.0%ni, 99.1%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
Mem:  98848220k total, 71565428k used, 27282792k free,   298288k buffers
Swap: 25165816k total,      340k used, 25165476k free,  1655680k cached

  PID USER      PR  NI  VIRT  RES  SHR S %CPU %MEM    TIME+  COMMAND
23010 oracle    15   0 10.2g  35m  26m S  8.9  0.0   0:01.49 oracleDEMO1 (DESCRIPTION=(LOCAL=YES)(ADDRESS=(PROTOCOL=beq)))
 4045 oracle    RT   0  352m 152m  53m S  1.6  0.2 154:17.12 /u01/app/11.2.0.3/grid/bin/ocssd.bin
 3982 root      16   0  357m  36m  15m S  0.7  0.0  69:04.93 /u01/app/11.2.0.3/grid/bin/orarootagent.bin
10817 root      15   0 13300 1880  928 S  0.7  0.0   0:04.93 /usr/bin/top -b -c -d 5 -n 720
25608 osborne   15   0 13288 1868  932 R  0.7  0.0   0:00.10 top
 3947 oracle    16   0  371m  36m  15m S  0.3  0.0   9:30.65 /u01/app/11.2.0.3/grid/bin/oraagent.bin
 5124 oracle    18   0 1468m  16m  13m S  0.3  0.0   0:36.38 asm_asmb_+ASM1
 5443 oracle    15   0 1345m  57m  16m S  0.3  0.1 111:57.03 /u01/app/11.2.0.3/grid/bin/oraagent.bin
12350 oracle    15   0 8442m  37m  20m S  0.3  0.0  36:20.05 ora_dia0_DBFS1
26703 oracle    15   0 10.3g  53m  22m S  0.3  0.1  29:26.49 ora_dia0_DEMO1
    1 root      15   0 10364  752  624 S  0.0  0.0   0:33.58 init [3]
    2 root      RT  -5     0    0    0 S  0.0  0.0   0:01.76 [migration/0]
    3 root      34  19     0    0    0 S  0.0  0.0   0:01.09 [ksoftirqd/0]
    4 root      RT  -5     0    0    0 S  0.0  0.0   0:00.00 [watchdog/0]
```

2. Huh? The table is not pinned in flash cache. Therefore, the blocks are not read from flash cache on full scans. Right? I believe this is the appropriate configuration, because letting Oracle use the flash cache for single-block reads and not full scans is the way to go most of the time. Of course, if you’re playing tricks to try to maximize a benchmark, pinning a table in flash cache can help, but we don’t play those kinds of tricks during POCs, nor do customers make use of this capability (at least not very often). I guess I should qualify that.
A single data warehouse might make pretty good use of this technique when trying to maximize scan rate on a big fact table, but most Exadatas I’ve worked on support multiple DBs with a mixture of workload characteristics. Those profiles, I think, are better served by not pinning tables in flash cache. That said, I pinned the CLASS_SALES table in flash and ran the query just to see how it performed, and it was about 4x faster when read from flash (15 seconds or so). The CPU on the compute node was still not heavily stressed. See the following snippet of top output:

Traditional index access speed in this case is about the same as the storage index, but at the expense of maintaining another physical structure. And if I wanted to query on start_date I’d need another index and so on. It’s what we’ve done our whole lives – before we got our hands on Exadata that is. 😉

P.S. CLASS_SALES is the only table in the KSO schema in this DB.

Any way we can get a copy of the E4 presentations?

Hi Rajesh,

Out of respect for the attendees that paid to come to the event we will not be making the presentations available to the public at the present time. We will however, be posting the opening discussion between me and Cary Millsap – along with a few interjections from Jonathan Lewis. 😉

Kerry

> Deep sigh.

Whatever!

…and thanks for your time.

Ha!

…no problem, it’s just a lot of work after a long day of work. I hear I’m easier to put up with on the weekends. 😉

No sweat…just had to tease you. Good thread. Hope we can have a beer at OOW.

Hmm

Just wondering, Kerry, if we will see an in-memory database like TimesTen acting as a cache and replicating continuously to the Exadata machine as a possible solution for write-intensive workloads?

Hi Hrishy,

Sorry for the delay in replying. I’ve been on vacation and haven’t been attending to my duties. 😉 Anyway, I don’t believe we’ll see something like TimesTen as a way of helping speed up write-intensive workloads on Exadata. Andy Mendelsohn mentioned in his presentation at E4 that a write-back cache would be added to the Exadata feature list in the near future. I think that is the direction Oracle is heading and expect to see that feature rolled out very soon.

Kerry

It always worries me when experts present figures without comparing like with like.

From the CPU perspective, if you want to pit SPARC against Intel Xeon, we already know the answer: Xeon will be the winner, no question and no surprise. Guess why Oracle designed Exadata with Intel processors and not SPARC!

Performance figures give just a reference point that should always be compared with the kind of workload you want to deal with. I read a lot of marketware that tends to manipulate customers knowingly, as most of them, unfortunately, are not very knowledgeable about their own workload, except the mainframe guys. A good machine architecture is one that is balanced: CPU – RAM – IO.

A big thank you to Kevin Closson for his willingness to speak the real truth based on facts. Regards

Hi pacdille,

I’m not really sure I understand your point other than the hardware platforms in the story were not the same.

To repeat my last reply to one of your comments: “Moving to Exadata is not the same thing as just moving to faster hardware. There are some fundamental changes in how Oracle behaves on Exadata. That’s where the improvements come from, not the hardware. We frequently do a demonstration where we turn the Exadata software optimizations (offloading) off and run baselines. We then turn the features on one at a time (column projection, then filtering, then storage indexes) and watch the execution times drop like a rock. If you are at OOW you can come by the Enkitec booth and see Tim Fox do the demonstration in person. If you ask nicely, he may even let you log on to one of our Exadatas and try some queries on your own. ;)”

By the way, I had a couple of beers with Kevin last night and agree with your admiration for him, although I much prefer to talk to him than to type messages back and forth. 😉