Extremes

Last week I got to look at a version 9 Statspack Report from a database where the buffer cache was set to 32M. That’s right, 32M. I don’t think you can buy a thumb drive that small. They were using 16K blocks too, so what’s that:

(32*1024*1024)/(16*1024) = 2,048 blocks

Not very many blocks for that database. Needless to say it wasn’t working very well for the multi-hour batch job they were trying to run.

Then over the weekend someone sent an AWR report from a version 10 database and it had a 60G buffer cache. That’s the biggest one I’ve ever seen!

So it was a pretty interesting week (from a geeky technical standpoint anyway)!

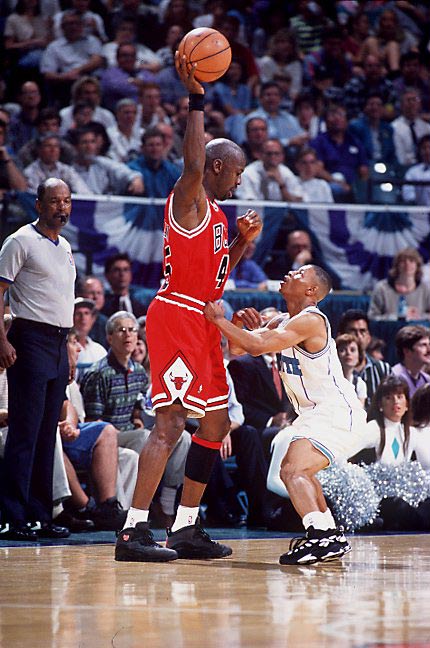

It reminded me of Muggsy Bogues guarding Shawn Bradley, or Manute Bol, or MJ, or just about anybody.

Anyone seen a bigger (or smaller) buffer cache recently?

I’d love to hear about it.

>> it had a 60G buffer cache

My God, a 60 GB buffer cache (the bigger the better 😉 )…..

This is the biggest cache size I have ever heard of.

[…] di Cristian Cudizio Stamattina, leggendo l’ultimo post di Kerry Osborne intitolato “Extremes“, mi è venuto in mente un bizzarro comportamento segnalatomi da un programmatore su un […]

60 Gb…bah!

Wow – 100G buffer cache! Awsome!

What’s your buffer hit ratio? ;.)

Seriously though, any issues caused just by having it that big?

We had to turn off db_cache_advice. Startup and shutdown takes a REALLY long time. We appear to have an increase in CBC latch waits since going to 10g and doubling the cache… It used to be around 50 GB, and I don’t recall the keep pool being anywhere nearly that large.

The hit ratio is 90.7, so the cache is obviously too small 😀

Ha! That’s funny. The 60G DB is spending about 25% of it’s time on i/o – (so I guess it needs a bigger cache as well). Actually it has a very small keep pool (relative to the overall buffer cache size) and it looks like they’ve got some objects assigned that won’t fit, so that’s what’s causing all the i/o.

[…] Osborne was playing with numbers too, in his own way—pointing out some extremes in buffer cache sizes, and asking for his readers’ […]

In descending order of size:

120GB, 80GB, 64MB.

The 64MB was a few years ago. The machine had 8GB of RAM, but they weren’t an Oracle site and didn’t have a DBA.

Remember how Oracle used to ship an init.ora file with things like:

# db_block_buffers = 8000 # big

# db_block_buffers = 4000 # medium

# db_block_buffers = 2000 # small.

Well they read the file and decided that their database was big, so …

Yeah, I remember the big,mediuum,small settings, back before there was even an spfile. It’s funny how some of those things get carried along, like an 11g database with _spin_count set (carried forward for 10 years and no one knows why anymore).

120G is impressive. Did it work well?

160 MB for Oracle e-business suite database in production 🙂

The implementer of EBS did not send any dba to that site, so they found a place in the docs that states 160 MB as a minimum and used the number as an recomendation. They called me 1 month after the database went in production, to spped the things up. At that time they had 8 GB of (mainly free) physical memory and have placed an order for 8 more, hoping that adding more memory will help.

Well, it was a problem with too little memory, indeed 🙂

Dallas,

I’m curious–what statistics did you use to determine that 50G of keep is better than 0?

Mike

[…] actually built some databases with 32M buffer caches (the one I mentioned in this previous post on Buffer Cache Extremes was one of his). He went so far as to say that he thought that developers should be using […]

New record! Last week I just saw a 9.2 database with a 24M buffer cache.

Kerry

10.1 data warehouse on a 24GB RAM machine – 16 MB of buffer cache.

This was 10.1.0.3 I think with SGA_TARGET set to 8GB. However the shared pool kept growing at the expense of buffer cache due some bug and eventually used almost all of SGA_TARGET, leaving only 1 x 16MB granule for buffer cache – and the instance crashed a while later.

Well the 24MB 9.2 database at least stayed up. But still, 16MB is the new record!

By the way, I really don’t like AMM (I think it is an acronym for “Automatic Memory Messer-upper”). Even in 11g, it still seems to way over-allocate the shared pool on top of the occasional flurries of re-allocations. Seems like a feature that has lot’s of problems but doesn’t provide much benefit to most systems.

Kerry

I just saw a report of testing a 540G SGA by the Solaris Internals guys. No real data (they didn’t say how much was buffer cache vs. shared pool) but here’s a link anyway.

http://www.solarisinternals.com/wiki/index.php/Application_Specific_Tuning

Kerry