Well the Enkitec Extreme Exadata Expo (E4) is now officially over. I thoroughly enjoyed the event. I personally think Richard Foote stole the show with his clear and concise explanation of why a full table scan is not a straight forward operation on Exadata, and why that makes it so difficult for the optimizer to properly cost it. But Maria Colgan came out with a fiery talk on the optimizer that gave him a good run for his money (she actually had the highest average rating from the attendees that filled out evaluation forms by the way – so congratulations Maria!). Of course there were many excellent presentations from many very well known Oracle practitioners. Overall it was an excellent conference (in my humble opinion) due in large part to the high quality of the speakers and the effort they put into the presentations. I am also thankful for the fact that Intel agreed to sponsor the event and that Oracle supported the event by allowing so many of their technical folks to participate.

While I felt that the overall message presented at the conference was pretty balanced, I did leave with a couple of general impressions that didn’t really feel quite right. Of course having the ability to express one’s opinion is one of the founding principals of our country, so I am going to do a series of posts on generalities I heard expressed that I didn’t completely agree with.

The first was that I got the impression that some people think Exadata isn’t good at OLTP. No one really said that explicitly. They said things like “it wasn’t designed for OLTP” and “OLTP workloads don’t take advantage of Exadata’s secret sauce” (I may have even made similar comments myself). While these types of statements are not incorrect, they left me with the feeling that some people thought Exadata just flat wasn’t good at OLTP.

I disagree with this blanket sentiment for several reasons:

- While it’s true that OLTP workloads generally don’t make the best use of the main feature that makes Exadata so special (namely offloading), I have to say that in my experience it has shown itself to be a very capable platform for handling the single block access pattern that characterizes what we often describe as OLTP workloads. I’ve observed many systems running on Exadata that have average physical single-block read times in the sub-1ms range. These are very good times and compare favorably to systems that store all their data on SSD storage. So the flash cache feature actually works very well, which is not too surprising when you consider that Oracle has been working on caching algorithms for several decades.

- I think part of the reason for the general impression that OLTP doesn’t work well on Exadata is the human tendency to make snap judgements based on reality vs. expectations, rather than actually thinking through the relevant facts. For example, when you go to a movie that has been hyped as being on of the best of the year and a great cinematic achievement, you are more likely to come away feeling that the movie was not that great, simply because it didn’t live up to your expectations. Whereas a little known movie is more likely to impress you simply because you weren’t expecting that much. When you sit down and actually evaluate the movies side by side, you will probably come to the conclusion that hyped movie was indeed better (people don’t usually bestow accolades on totally worthless stuff). I think that, at least to some degree, OLTP type work loads on Exadata suffer from the same issue. The expectations are so high for the platform in general that even good to excellent results fall short of the massive expectations that have been created based on the some of the impressive results with Data Warehouse type work loads. But that doesn’t mean that the platform is not capable of matching the performance of any other platform you could build at a similar price point.

- I don’t think I’ve ever seen a true OLTP workload. That is to say, I can’t recall ever looking at a system that didn’t have some long running reporting component or batch process that does not fall into the simple single block access (OLTP) category. So I believe that the vast majority of systems categorized as OLTP should more correctly be called “mixed” workloads. In these types of systems, the offloading capability of Exadata can certainly make a big difference for the long running components of the system, but also can improve performance on the single-block access stuff by reducing the contention for resources caused by the long running queries that are unavoidable on standard Oracle architecture.

- Very few of the Exadata systems we’ve worked on over the last few years are supporting a single application or even a single database. Consolidation has become the name of the game for many (maybe most) Exadata implementations. I did a presentation at last year’s Hotsos Symposium where I compiled statistics from 51 Exadatas that we had worked on. 67% were being used as consolidation platforms. This makes it even more likely that an OLTP type workload will benefit from running on the Exadata platform.

So does Exadata run stand alone “pure OLTP” workloads 10X faster than any other standard Oracle based system you could build yourself?

No it does not.

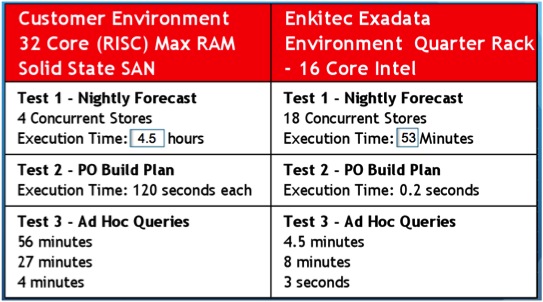

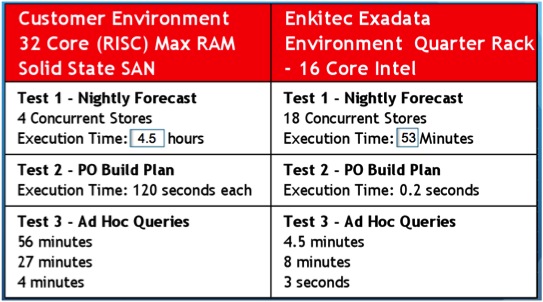

But it does work as well as almost any system you could build, regardless of how much money you spend on the components. By way of proof I’ll tell you a story about a system that we benchmarked on an Exadata V2 quarter rack system. The benchmark was on a batch process that updated well over a billion records, one row at a time, via an index. The system we were comparing against was an M5000 / Solaris system with 32 cores and all data was stored on an SSD SAN. The benchmark showed Exadata to be a little over 4 times faster. This was primarily because most of the work was logical i/o that was serviced by the buffer cache on both platforms. The faster CPUs in the Exadata accounted for most of the gains. Nevertheless, the system was not migrated to an Exadata. A new system was built using a faster Intel-based server which made up the CPU speed difference (and in fact exceeded specs on the V2) and a more capabile SSD based SAN was installed. The resulting system ran the benchmark in about the same time as the original V2 quarter rack (actually it was very slightly faster). Unfortunately the SAN alone cost more than the Exadata. And the real life system also did a bunch of other stuff like some long running ad hoc queries. Guess which platform dealt with those better. 😉 Here’s a slide that summarizes some of the results.

In fairness, I should point out that there is a subset of “OLTP” workloads that are very write intensive. Since writes to data in Oracle are usually asynchronous, while writes to log files usually must complete before a transaction can complete, it’s usually writes to log files that are the bottle neck in these types of systems. However, if the synchronous log file writes can be avoided (or optimized), much higher transaction rates can occur and writes to DB files can become a bottle neck. In those cases, pure write IOPS can be a limiting factor. My opinion is that such systems are relatively rare. But they do exist. Exadata is not currently the best possible option for these extremely write intensive workloads. I say currently because at the time of this writing the storage software does not include any sort of write back cache for buffering writes to data files. However, this is a feature that is expected to be released in the near future. 😉

So that’s it for the OLTP topic.

Stay tuned for Part II, where I’ll discuss another general impression with which I didn’t really agree…